In Vertica 10.0, WOS (Write Optimized Store) will be deprecated. In this blog, we introduce the historical justification for WOS and its purpose, why we believe that it’s no longer needed, and our justification for deprecating it. We’ll go over the data and the testing we performed that gives us the confidence to ensure that we can safely deprecate this functionality with little or no impact to our end-user base. We’ll also share how you can test this on a Vertica 9.3 database to check the impact on your environment.

What Is WOS and Why did We Build it?

What is WOS?

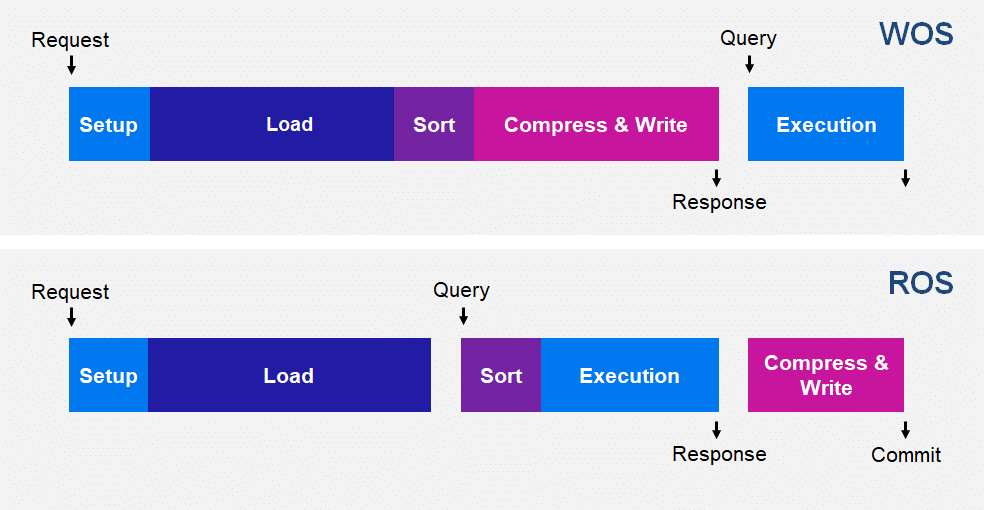

Write Optimized Store (WOS) is a memory-resident data structure for short-term data storage.

What is the purpose of WOS?

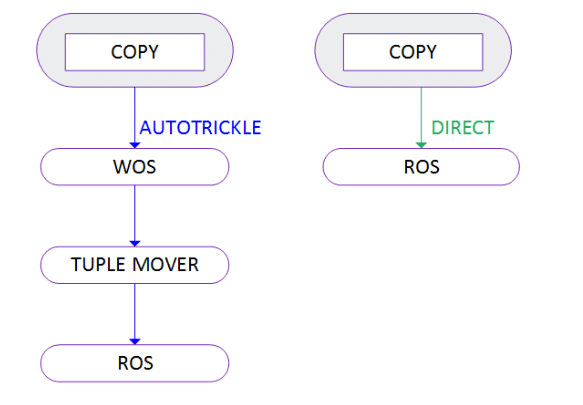

WOS is typically accessed by data manipulation language (DML) statements that load or remove data, like INSERT/COPY/UPDATE/DELETE, to facilitate data loads for very small amounts of data. WOS stores records without data compression, sorting, or indexing. Data in WOS is sorted “just in time”, when and only when it is queried. WOS organizes data by epoch and holds both committed and uncommitted transaction data.

Why did Vertica build WOS?

WOS was created to minimize the impact, overhead, and performance penalty of loading really small amounts of data in each commit.

For detailed information, see Understanding ROS and WOS: A Hybrid Data Storage Model

- Vertica is a columnar database. It creates a separate file for each column in a table. If a user’s DML workload consists of very small amounts data, the number of files created could quickly get very large. This would result in ROS push back. WOS was used to provide a batching effect, where the data would be stored in memory as it was loaded and only periodically flushed to disk.

- Sorting, compression and indexing small amounts of data is inefficient and does not scale well. It made sense to accumulate these in memory and create one large batch to gain processing efficiencies. Writing smaller files was inefficient from a Linux file system; conversely, creating a really large number of small files in a directory caused performance issues (especially in an ext2 file system, where performance problems start after 10,000 files in a directory).

- Compression and encoding provide significantly more value as the amount of data increases. There is minimal value in encoding and compressing a single row of data.

- The Linux kernel and the Vertica database process allocate some minimum buffer sizes to process any file. Really small files impose a memory overhead, since these buffers in memory can exceed the file size. Each file descriptor requires 1MB memory. Read-ahead buffers can be 2M. If a file is few hundred bytes in size, it imposes a significant memory overhead in order to be processed.) In the early days when most Vertica nodes had about 8 to 16 CPUs and 32 GB memory, the WOS enabled the Vertica process to utilize system resources more efficiently, thus keeping resources available for querying.

What is Batching Factor?

Batching factor is a synthetic measure described as the number of commits divided by the number of files written to disk. It is used to describe the effectiveness of the WOS.

If the batching factor is 1, then for every commit you are getting a single file/ROS written to disk. This means that WOS is not really providing any batching and is not really useful.

If the batching factor is 100, then the WOS is reducing the number of files being created by a factor of 100, that is, WOS is very effective. But for WOS we would have had 100 times more files on disk.

Reasons for Deprecating WOS

System Limitations No Longer Exist

Some of the original system limitation that lead to the creation of WOS do not exist anymore.

- The ext4 file systems can support several billion files in a directory with minimal if any performance impact.

- Computers have significantly more cores that they used to have. A Typical Vertica node can now have 32-96 cores. In the past, assigning 3 of 8 CPUs to the Tuple Mover to merge small files was more than 30% of system CPU. Today, assigning 7 threads to the Tuple Mover to merge small files will only be 15% of the overall resources.

- In older versions of Vertica the Tuple Mover got 200 MB of memory by default. Newer versions of Vertica assign 5% of the total system memory of the node to the Tuple Mover; this can be as much as 6 GB or more (on machines that have 128 GB RAM).

- At a basic level, reading and writing data to disk is much faster in modern hardware (RAID cache, SSD, NVME etc.). Consequently, MergeOut operations perform significantly better and faster than on traditional magnetic storage.

Vertica Enhancements Obviate the Need for WOS

We’ve made many enhancements to Vertica that remove the need for WOS.

- Vertica implemented Storage Containers and ROS Bundling in 7.2 to prevent proliferation of small files. This feature is currently turned on by default. See ROS Bundling.

- Previously we allocated memory for MergeOut based on the defined size of a tuple/row of data. Currently we only allocate memory based on the actual size of the tuple/data. This has made MergeOut much faster specially for merging wide and flattened tables where the data rarely spans the entire width of the table.

- The Tuple Mover now does Reflexive Mergeout (see Reflexive Mergeout). In the past the Tuple Mover would periodically consolidate small files. Hence there was a risk of accumulation of a large number of small files in between two sequential checks. This would result in ROS Pushback. With reflexive MergeOut, the check is not periodic anymore, rather it is continuous and targeted only to the projections that actually need MergeOut. Hence this risk has been reduced.

- The Tuple Mover used to have 3 threads and 200 MB memory by default, One thread for WOS MoveOut and two threads for ROS MergeOut. Newer version have 7 threads and 5% of the system memory by default dedicated to MergeOuts.

Customer Usage and Data Analytics Suggest Minimal Use of WOS

We realized that WOS does not help workloads that continuously load small batches into multiple tables. In such scenarios, WOS gets full fairly quickly and thereafter all load is direct to ROS. Hence the proliferation of numerous small files continues unabated. It really only helps workloads that load in bursts, allowing a reasonable time for the WOS to flush to disk in between such bursts. Customers that have the flexibility to load in large batches generally prefer to do so. Customers that do not have the flexibility to load in large batches load continuously and pretty soon overwhelm WOS and cease to get the benefit. (i.e. the batching factor would quickly drop of to 1 and stay there till there for a brief pause in load).

We analyzed data loads across 4 million distinct tables across 500 customer accounts.

- For 80% of the tables the batching factor was 1. i.e. data loads into those tables did not benefit from WOS.

- For 10% of the tables (400K tables) the batching factor was 2 (here the benefit was marginal at best).

- Only 10% of the tables utilized the WOS effectively for data loads.

WOS is Not Durable

WOS is an in-memory data structure, and is permanently lost in the event of an abnormal shutdown (like a crash). If a database as a whole crashes, data in memory is lost; this can include committed transactions, since the Tuple Mover may not have flushed these transaction to disk yet. Hence, ASR (Administrator Supported Recovery) is required, which can result in partial data loss. Removing WOS improves the robustness of the system and greatly simplifies the need for node recovery by eliminating this source of data loss. This becomes especially important in the cloud (Eon mode), where nodes/instances are provisioned and removed rapidly, or when the cloud provider may evict an instance (like a spot instance). The WOS in such a situation will cause data loss. Hence, in an Eon mode Vertica database there is no WOS.

WOS Increases Vertica Process Memory Size (RSS)

WOS data structure consumes memory and takes away from available memory for query workload. The size of WOS directly adds to the Vertica process Resident Memory Size.

WOS Degrades Query Performance

Due to the benefits of sorting and compression, and to avoid large joins, Vertica users prefer wide tables (tables with lots of columns, or table with very large columns). When such tables have large amounts of data in WOS, the queries on these tables have to sort this data at run time. Sorting wide tables at run time inflicts a significant performance penalty on these queries. We see that for wide table queries, performance degrades with the amount of data in WOS.

WOS is an Obstacle to Identical Storage which Complicates Node Recovery

Vertica stores two copies of data. However these copies of data on disk/files are not identical because of WOS. As they may have been batched differently on each of the nodes that stores the individual copy. Consequently efficiencies of having identical storage cannot be realized for the purposes of node recovery, reorganization, refresh and MergeOuts. The longest and most unpredictable phase of node recovery is the Replay Delete Phase. This phase is necessary since the storage is not identical and deletes have to be replayed to get the data into its final and correct state on the recovering node. A lot of this complexity can be entirely eliminated by removing WOS. Without WOS, we can assume identical storage and recover any node trivially by simply moving data files from the buddy node to the recovering node. This will considerably speed up node recovery and make it more predictable and robust.

WOS Makes the DBA’s Job Harder

In order to get the most out of WOS it has to be constantly tuned based on a changing workload. This is specially difficult in case of multi-user workloads. Database administrators had to monitor WOS usages and manage the configuration parameters at different (system, table, workload) levels . Customer workloads have grown significantly and are constantly changing and this is no longer a sustainable method to manage the database. The elimination of the WOS will simplify the management of the vertica cluster.

Testing Your Workload

In Vertica 9.3, we provided a configuration parameter to disable the WOS. This was done to allow user to execute their workload without the WOS.

We recommend you turn off the WOS in Vertica 9.3 using the following steps.

dbadmin=> select set_config_parameter('DMLTargetDirect' , 1);set_config_parameter----------------------------Parameter set successfully(1 row)

dbadmin=> alter resource pool wosdata memorysize '0K';ALTER RESOURCE POOL

dbadmin=> alter resource pool tm maxmemorysize '5%' maxconcurrency 7 plannedconcurrency 6;ALTER RESOURCE POOL

FAQs

Will high concurrency result in ROS Pushback?

The short answer is no. We tested 60 concurrent processes performing continuous 200KB file loads, split evenly across target table widths from 10 to 1500 columns. Over a four-day continuous load test, no ROS pushbacks were experienced. The Tuple Mover was able to keep up with Merge-Outs.

Related Posts:

Direct is Now the Default Load Type