While taking a long-awaited, and, IMHO some well-deserved R&R in the sun, I heard that ping notification coming from my backpack, and not being one who can ignore such things, reached for my iPad.

With my OOTO email response already set, I should be able to ignore most incoming messages, texts and otherwise, safe in the knowledge that the Vertica Academy Team will pick up anything that is sent to one of our distribution lists. If it’s urgent, the caller will have my mobile number and can reach me. Or it can simply wait until my return. It’s taken me over four decades to get to a point where I can almost “let go.” But very occasionally something will pop up that I cannot resist opening.

This email came from a relatively new acquaintance who has recently joined one of the companies I am working with – a Data Science Engineer at Jaguar TCS Racing (Formula E).

What caught my eye was the opening question. “Hi Mark. Just wondering what it costs to run queries in Vertica?”.

She went on to say: “I know Google BigQuery very well and being billed on the data retrieved for each query, I have previously set up data extracts from BigQuery into Tableau, so that we can reduce the financial cost of running queries”.

I was absolutely flabbergasted.

Being a passing user of Tableau (one of the ~100 technology tools that interact seamlessly with Vertica), I was aware of its two modes of operation: “Live Connections” and “Extracts.” I must admit, other than guessing what each was, I did not know much about the background to these and had always opted for the “Live Connections” option.

Time to read the documentation!

As with so many things in Vertica, our documentation, blogs, and white papers are second to none. As expected, searching for “T” under Technology Partner Integrations did not disappoint, with Connection Guides, Tips and Techniques, QuickStart Guide, and Partner QuickStart Guide — all for Tableau.

Clicking on Tips and Techniques, as well as a section on “Live Connections and Extracts” (which I will come back to in a moment), I find that Tableau also supports:

- Parallel query processing

- Building a Tableau Data Source by dragging and dropping tables instead of writing custom SQL

- Join culling

- Checking for well-constructed joins

- Adding or excluding columns

All designed for ensuring optimal performance when using Tableau with Vertica, and clearly something I needed to look deeper in to.

Coming back to the “Live Connections and Extracts,” I had already guessed much of what this entails, but for completeness, I decided to repeat some of what is documented here:

After you connect to Vertica, you can maintain a live connection, or you can create a data extract. A data extract is data from Vertica that you store locally in Tableau.

We recommend that you use a live connection when:

- The volume of data is not conducive to creating an extract.

- You need to leverage the analytic capabilities of Vertica.

- Your workbook uses pass-through RAWSQL functions.

- You need near real-time analysis.

- You need robust user-level security.

Use data extracts only when:

- You need offline access to the data.

- Many users are accessing a single workbook with data that is not needed in real time.

This very much comes back to what we talk about so often in Vertica. With very few exceptions, Vertica is being used to analyse large volumes of data. We are not talking about an Excel spreadsheet, or an Oracle database of maybe a few hundred GB of data. Most users of Vertica are analysing 10s or 100s of TBs of data. Many are analysing PBs of data. Such data volumes make it impractical, or even impossible, to extract data out of a core database into a 3rd-party tool (such as Tableau) to perform the analytics – never mind the time it takes to do so.

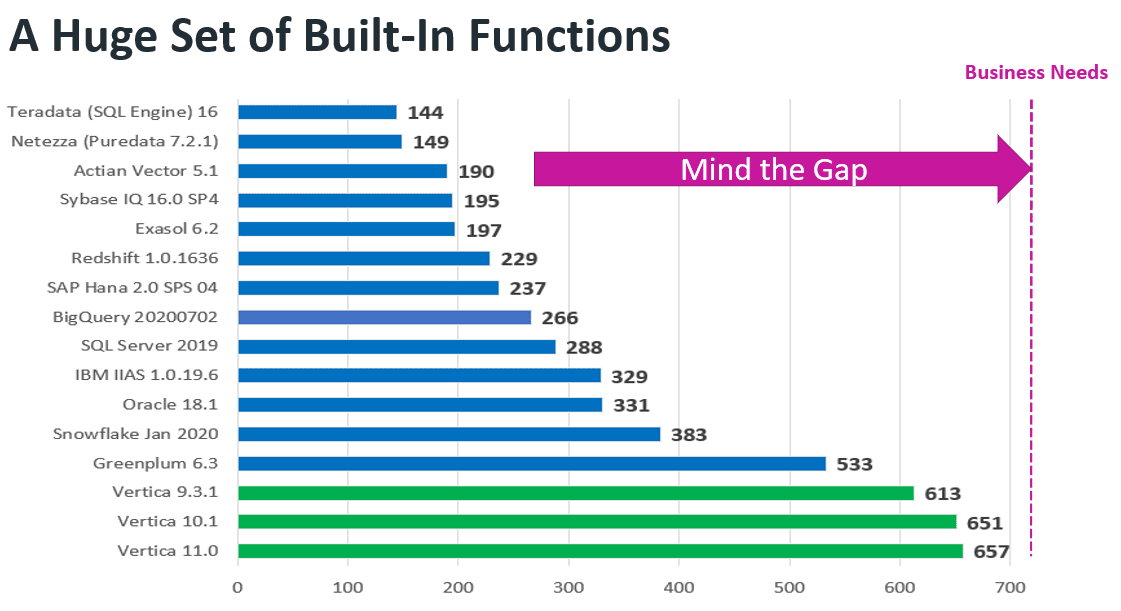

This is one of the reasons Vertica has worked tirelessly to ensure it has more in-database functions than any other analytical database out there. With Vertica’s nearly 700 in-database functions, there is no need to move data out of Vertica to analyse it in another tool. You simply move the processing to the data – not the other way round.

If you happen to come up with a business requirement for a function that does not exist in the pool of nearly 700, not a problem. Write your own!

Vertica has an extremely flexible and extensible framework for developing your own User-Defined Extensions (aka UDx). Written in Python, C++, Java, or R, the functions you develop site inside the database, and can be called upon like any of the other ~700 in-database SQL functions.

Come to think of it, we have already done this with Jaguar TCS Racing. They needed a lowpass filter. After scratching our heads for a few moments, we had to admit to not having one. We got close to providing what they were looking for by using outlier detection, timeseries and conditional true functions, but it didn’t quite cut it for them.

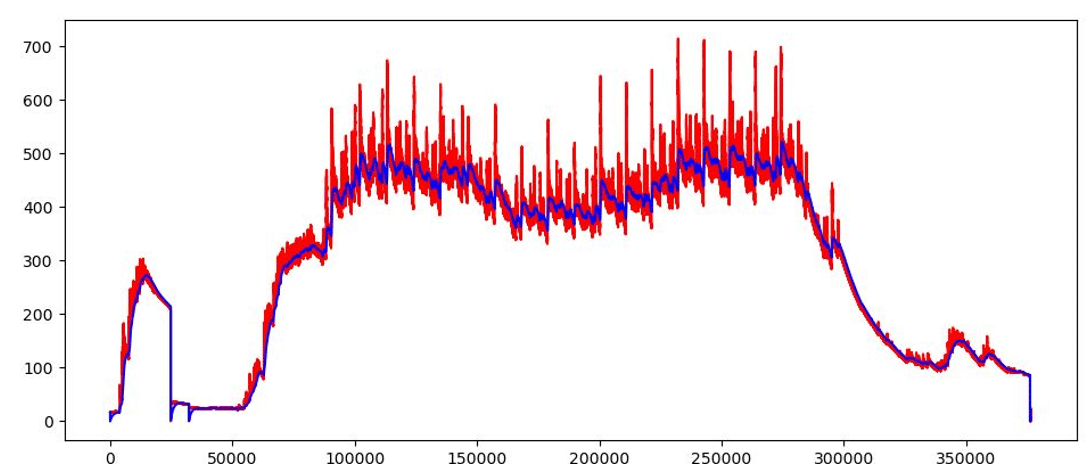

What they were looking for were ways to filter the frequency content above or below some definable threshold of a signal, and thus to remove “noise” from a signal.

Here the red line represents the temperature of a brake disk, and the blue line shows what it looks like when the noise is removed.

There was nothing to do but to write own User Defined Extension.

Sure enough, with just a few lines of Python code, we had a custom-build UDx deployed in their database, providing them with just what they needed.

As for needing real-time analytics? We are living in the 2020s; this is not the turn of the 21st Century! Long gone are “batch reporting,” queries that take hours or days to be prepared, or analytics based on stagnant, out-of-date data. Businesses demand real-time analytics on live data!

But let me get back to the original question: about the reason for opting for “Extracts” from Google Big Query to feed into Tableau. Sure, some technologies out there (and I will not be so unprofessional as to name them) are so damn slow at running analytics queries. They do not have the breadth of in-database functions that Vertica has, or are too complicated and convoluted to use.

When your database technology charges for each query you run against the database, you might naturally choose to look for alternative solutions.

This also reminds me of when I published my Perfect Vision 20/20 – Vertica’s 20 coolest features to see in 2020. Although I only had a meagre 1500 views (I am clearly no “Baby Shark Dance with 10bn views), I loved one of the comments:

Why is Google Big Query missing in the comparison #9 “A huge set of built-in functions”? Google Big Query has also Machine Learning stuff inside their data warehouse and supports the SQL Standard 2011.

For which my reply was:

The reason Google Big Query was missing, was at the time I published this, we had not done a comparison of it. However, we have now done this (BigQuery 20200702), and it comes in with 266 in-database functions. This puts it behind SQL Server (288), IBM IIAS (329), Oracle (331), Snowflake (383), Greenplum (533) and way behind Vertica 10.0 coming in at 646. Though it did rank higher than Redshift, Exasol, Sybase IQ, Actian Vector, Netezza Puredata and Teradata.

Sadly, the author of the comment did not come back with his retort.

This all comes back to what I said above: You do not have to extract data from your database to do your analytics!