Cheryl Bradstreet

June 28, 2021

Vertica Blog

ETL

Vertica and Attunity Replicate Change Data Capture

Amrita Akshay, Information Developer

June 15, 2020

Practice Makes Perfect at BDC 2020

Jeff Healey, Vice President of Marketing, Vertica Product Group in Micro Focus

February 19, 2020

Vertica Partners Are the Key to Your Success at BDC 2020

Jeremiah Morrow, Senior Industry Product Marketing Manager

January 8, 2020

DIRECT Is Now the Default Load Type

James Knicely, Vertica Field Chief Technologist

October 19, 2019

Fast Data Loading with Vertica

Phil Molea, Sr. Information Developer, Vertica

August 20, 2018

What are your Data Loading Preferences?

Soniya Shah, Information Developer

July 30, 2018

Soniya Shah, Information Developer

June 14, 2018

What Should I do if my Node Recovery is Slow?

Soniya Shah, Information Developer

June 14, 2018

Sizing Your Vertica Cluster for an Eon Mode Database

Soniya Shah, Information Developer

May 15, 2018

Handling Duplicate Records in Input Data Streams

Soniya Shah, Information Developer

April 17, 2018

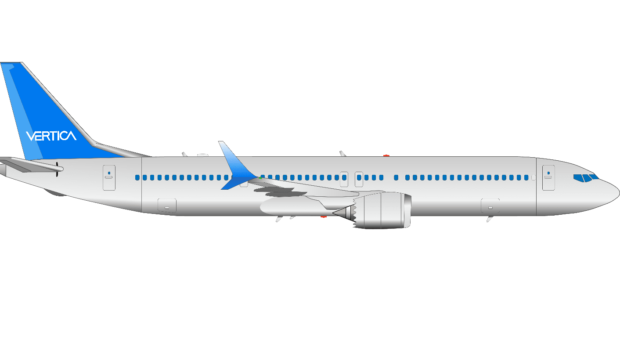

Blog Post Series: Using Vertica to Track Commercial Aircraft in near Real-Time – Part 6

Mark Whalley, Manager, Vertica Education

March 27, 2018