Containerized Vertica on Kubernetes

Kubernetes is an open-source container orchestration platform that automatically manages infrastructure resources and schedules tasks for containerized applications at scale. Kubernetes achieves automation with a declarative model that decouples the application from the infrastructure. The administrator provides Kubernetes the desired state of an application, and Kubernetes deploys the application and works to maintain its desired state. This frees the administrator to update the application as business needs evolve, without worrying about the implementation details.

An application consists of resources, which are stateful objects that you create from Kubernetes resource types. Kubernetes provides access to resource types through the Kubernetes API, an HTTP API that exposes resource types as endpoints. The most common way to create a resource is with a YAML-formatted manifest file that defines the desired state of the resource. You use the kubectl command line tool to request a resource instance of that type from the Kubernetes API. In addition to the default resource types, you can extend the Kubernetes API and define your own resource types as a Custom Resource Definition (CRD).

To manage the infrastructure, Kubernetes divides the hosts into master and worker nodes. Master nodes manage the control plane, a collection of services and controllers that maintain the desired state of Kubernetes objects and schedule tasks on worker nodes. Worker nodes complete tasks that the control plane assigns. Just as you can create a CRD to extend the Kubernetes API, you can create a custom controller that maintains the state of your custom resources (CR) created from the CRD.

Vertica Custom Resource Definition and Custom Controller

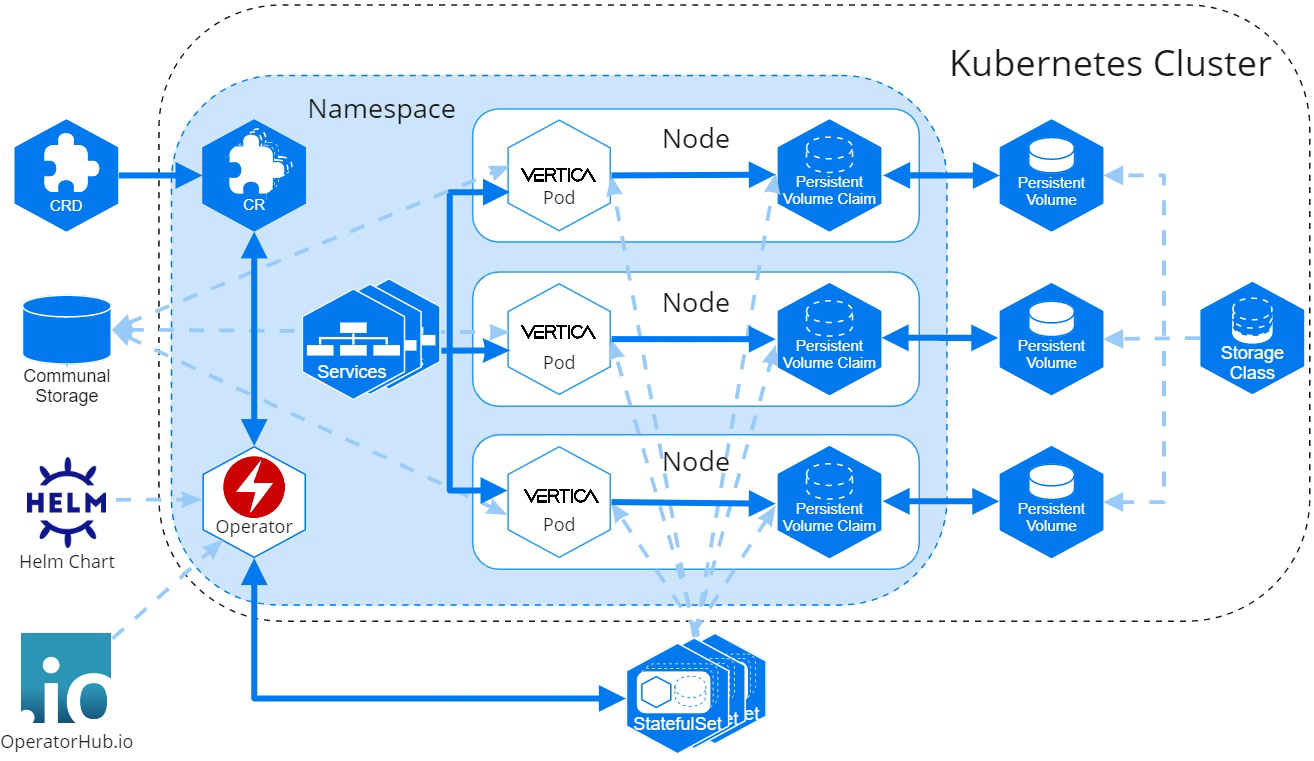

The Vertica CRD extends the Kubernetes API so that you can create custom resources that deploy an Eon Mode database as a StatefulSet. In addition, Vertica provides the VerticaDB operator, a custom controller that maintains the desired state of your CR and automates life cycle tasks. The result is a self-healing, highly available, and scalable Eon Mode database that requires minimal manual intervention.

To simplify deployment, Vertica packages the CRD and the operator in Helm charts. A Helm chart bundles manifest files into a single package to create multiple resource type objects with a single command.

Custom Resource Definition Architecture

The Vertica CRD creates a StatefulSet, a workload resource type that persists data with ephemeral Kubernetes objects. The following diagram describes the Vertica CRD architecture:

VerticaDB Operator

The operator is a namespace-scoped custom controller that maintains the state of custom objects and automates administrator tasks. The operator watches objects and compares their current state to the desired state declared in the custom resource. When the current state does not match the desired state, the operator works to restore the objects to the desired state.
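The comparison the operator performs can be pictured as a reconcile step. The following Python sketch models state as a hypothetical mapping of subcluster name to pod count and returns the actions that would close the gap. It illustrates the control-loop pattern only and is not the VerticaDB operator's code.

```python
"""Minimal sketch of a reconcile step: compare desired vs. observed state.

Assumption: state is modeled as a mapping of subcluster name -> pod count.
This illustrates the control-loop pattern; it is not operator code.
"""
from typing import Dict, List


def reconcile(desired: Dict[str, int], current: Dict[str, int]) -> List[str]:
    """Return the actions needed to move `current` toward `desired`."""
    actions: List[str] = []
    # Visit every subcluster that appears in either state, in a stable order.
    for name in sorted(desired.keys() | current.keys()):
        want = desired.get(name, 0)  # absent from desired state -> 0 pods
        have = current.get(name, 0)
        if have < want:
            actions.append(f"scale-up {name} by {want - have}")
        elif have > want:
            actions.append(f"scale-down {name} by {have - want}")
    return actions


if __name__ == "__main__":
    desired_state = {"primary": 3, "analytics": 2}
    observed_state = {"primary": 2, "analytics": 2, "old-sc": 1}
    for action in reconcile(desired_state, observed_state):
        print(action)
```

A controller calls a step like this repeatedly. When it returns an empty list, the observed state matches the declared state.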

In addition to state maintenance, the operator:

- Installs Vertica

- Creates an Eon Mode database

- Upgrades Vertica

- Revives an existing Eon Mode database

- Restarts and reschedules DOWN pods

- Scales subclusters

- Manages services for pods

- Monitors pod health

- Handles load balancing for internal and external traffic

To validate changes to the custom resource, the operator queries the admission controller, a webhook that provides rules for mutable states in a custom resource.
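As a rough illustration of the kind of rule an admission webhook enforces, this sketch rejects an update when a field marked immutable changes. The field paths in IMMUTABLE_FIELDS are hypothetical placeholders, not the operator's actual rule set.

```python
"""Sketch of an admission-style check that rejects edits to immutable fields.

The paths in IMMUTABLE_FIELDS are hypothetical placeholders, not the
VerticaDB operator's actual validation rules.
"""
from typing import Any, Dict, List, Tuple

IMMUTABLE_FIELDS = ["spec.communal.path", "spec.local.storageClassName"]  # hypothetical


def get_path(obj: Dict[str, Any], dotted: str) -> Any:
    """Follow a dotted path such as 'spec.local.dataPath'; None if missing."""
    node: Any = obj
    for key in dotted.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def validate_update(old: Dict[str, Any], new: Dict[str, Any]) -> Tuple[bool, List[str]]:
    """Allow the update only if no immutable field changed."""
    errors = [
        f"{path} is immutable"
        for path in IMMUTABLE_FIELDS
        if get_path(old, path) != get_path(new, path)
    ]
    return (not errors, errors)
```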

Vertica provides a Helm chart that contains the operator and the admission controller. For details about installing the operator and the admission controller, see Installing the VerticaDB Operator.

Vertica Pod

A pod is essentially a wrapper around one or more logically grouped containers. These containers consume the host node resources in a shared execution environment. In addition to sharing resources, a pod extends the container to interact with Kubernetes services. For example, you can assign labels to associate pods with other objects, and you can implement affinity rules to schedule pods on specific host nodes.
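Label-based association relies on selectors. An equality-based selector matches a pod when every key/value pair in the selector also appears in the pod's labels, as this short sketch shows. The label keys and values are hypothetical.

```python
"""Equality-based label selector matching, as Kubernetes applies it to pods.

A selector matches when every key/value pair it contains also appears in
the pod's labels. The label keys and values below are hypothetical.
"""
from typing import Dict


def selector_matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Return True if all selector pairs are present in `labels`."""
    return all(labels.get(key) == value for key, value in selector.items())


pod_labels = {"app": "vertica", "subcluster": "primary"}
print(selector_matches({"app": "vertica"}, pod_labels))           # True
print(selector_matches({"subcluster": "analytics"}, pod_labels))  # False
```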

DNS names provide continuity between pod life cycles. Each pod is assigned an ordered and stable DNS name that is unique within its cluster. When a Vertica pod fails, the rescheduled pod uses the same DNS name as its predecessor. If a pod needs to persist data between life cycles, you can mount a custom volume in its filesystem.
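StatefulSet pods are named `<statefulset-name>-<ordinal>`, and the governing headless service gives each pod a DNS name of the form `<pod>.<service>.<namespace>.svc.<cluster-domain>`. This sketch generates those names, assuming the default `cluster.local` cluster domain. The StatefulSet, service, and namespace names are hypothetical.

```python
"""Stable DNS names for StatefulSet pods.

Kubernetes names StatefulSet pods <statefulset>-<ordinal> and, through the
governing headless service, resolves them as
<pod>.<service>.<namespace>.svc.<cluster-domain>. The StatefulSet, service,
and namespace names used below are hypothetical.
"""
from typing import List


def pod_dns_names(statefulset: str, service: str, namespace: str,
                  replicas: int, cluster_domain: str = "cluster.local") -> List[str]:
    """Return the DNS name of each pod, ordered by ordinal."""
    return [
        f"{statefulset}-{ordinal}.{service}.{namespace}.svc.{cluster_domain}"
        for ordinal in range(replicas)
    ]


for name in pod_dns_names("verticadb-sc1", "verticadb", "vertica", 3):
    print(name)
```

Because the name depends on the ordinal rather than on the pod's IP address, a rescheduled `verticadb-sc1-1` pod resolves under the same name as its predecessor.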

Rescheduled pods require information about the environment to become part of the cluster. This information is provided by the Downward API. Environment information, such as the superuser password Secret, is mounted in the /etc/podinfo directory.
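A Downward API volume exposes each item as a file whose name is the item's path. A process in the pod might read such a directory as follows. The file names it finds depend on the operator's pod spec, so treat this as a sketch under assumptions rather than a description of the actual /etc/podinfo contents.

```python
"""Read a Downward API-style volume: one file per item, file name = key.

The directory layout and file names are assumptions for illustration; the
actual files under /etc/podinfo depend on the operator's pod spec.
"""
from pathlib import Path
from typing import Dict


def read_podinfo(directory: str = "/etc/podinfo") -> Dict[str, str]:
    """Return {file name: stripped contents} for regular, visible files."""
    info: Dict[str, str] = {}
    for entry in Path(directory).iterdir():
        # Kubernetes projects volume files through hidden "..data" symlinks,
        # so skip dotfiles and anything that is not a (resolved) regular file.
        if entry.is_file() and not entry.name.startswith("."):
            info[entry.name] = entry.read_text().strip()
    return info
```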

Sidecar Container

A pod runs multiple containers when those containers are tightly coupled and contribute to the same process. The Vertica pod supports a sidecar, a utility container that can access the Vertica server process and perform utility tasks for it.

For example, logging is a common utility task. Idiomatic Kubernetes practices retrieve logs from stdout and stderr on the host node for log aggregation. To facilitate this practice, Vertica offers the vlogger sidecar image that sends the contents of vertica.log to stdout on the host node.
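The sidecar logging pattern is simple: poll the log file and forward newly appended lines to stdout. The sketch below is not the vlogger implementation. The polling interval is an assumption, and the sketch ignores log rotation for brevity.

```python
"""Sketch of the sidecar logging pattern: copy new log lines to stdout.

Not the vlogger implementation; the polling interval is an assumption and
log rotation is not handled.
"""
import sys
import time
from typing import TextIO


def copy_new_lines(path: str, offset: int, out: TextIO) -> int:
    """Write complete lines appended after byte `offset` to `out`; return the new offset."""
    with open(path, "rb") as log:
        log.seek(offset)
        while True:
            line = log.readline()
            if not line.endswith(b"\n"):
                # EOF, or a partial line still being written: retry on the next poll.
                break
            out.write(line.decode("utf-8", errors="replace"))
            offset = log.tell()
    return offset


def follow(path: str, interval: float = 1.0) -> None:
    """Poll `path` forever, forwarding appended lines to stdout."""
    offset = 0
    while True:
        offset = copy_new_lines(path, offset, sys.stdout)
        sys.stdout.flush()
        time.sleep(interval)
```

Writing to stdout is what lets the container runtime on the host node collect the lines for log aggregation.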

If a sidecar needs to persist data, you can mount a custom volume in the sidecar filesystem.

For implementation details, see Creating a Custom Resource.

Persistent Storage

A pod is an ephemeral, immutable object that requires access to external storage to persist data between life cycles. To persist data, the operator uses the following API resource types:

- StorageClass: Represents an external storage provider. You must create a StorageClass object separately from your custom resource and set this value with the local.storageClassName configuration parameter.

- PersistentVolume (PV): A unit of storage that mounts in a pod to persist data. You dynamically or statically provision PVs. Each PV references a StorageClass.

- PersistentVolumeClaim (PVC): The resource type that a pod uses to describe its StorageClass and storage requirements.

A pod mounts a PV in its filesystem to persist data, but a PV is not associated with a pod by default. Instead, the pod is associated with a PVC that specifies a StorageClass in its storage requirements. When a pod requests storage with a PVC, the operator observes this request and then searches for a PV that meets the storage requirements. If the operator locates a PV, it binds the PVC to the PV and mounts the PV as a volume in the pod. If the operator does not locate a PV, a PV must be provisioned, either dynamically or manually by the administrator, before the operator can bind it to the pod.
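The matching step can be sketched as a filter over unbound PVs. This simplified version checks only the storage class and the requested capacity, and prefers the smallest PV that fits. Real binding also considers access modes, volume modes, and selectors. The class and field names are hypothetical.

```python
"""Simplified sketch of matching a PVC to an available PV.

Only storage class and capacity are checked; real binding also considers
access modes, volume modes, and selectors. Names are hypothetical.
"""
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PersistentVolume:
    name: str
    storage_class: str
    capacity_gi: int
    bound: bool = False


@dataclass
class PersistentVolumeClaim:
    name: str
    storage_class: str
    request_gi: int


def find_pv(claim: PersistentVolumeClaim,
            volumes: List[PersistentVolume]) -> Optional[PersistentVolume]:
    """Return the smallest unbound PV that satisfies the claim, or None."""
    candidates = [
        pv for pv in volumes
        if not pv.bound
        and pv.storage_class == claim.storage_class
        and pv.capacity_gi >= claim.request_gi
    ]
    return min(candidates, key=lambda pv: pv.capacity_gi, default=None)
```

When `find_pv` returns `None`, a PV must be provisioned, dynamically or manually, before the claim can be bound.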

PVs persist data because they exist independently of the pod life cycle. When a pod fails or is rescheduled, it has no effect on the PV. For additional details about StorageClass, PersistentVolume, and PersistentVolumeClaim, see the Kubernetes documentation.

StorageClass Requirements

The StorageClass affects how the Vertica server environment and operator function. For optimum performance, consider the following:

- If you do not set the local.storageClassName configuration parameter, the operator uses the default storage class. If you use the default storage class, confirm that it meets storage requirements for a production workload.

- Select a StorageClass that uses a recommended storage format type as its fsType.

- Use dynamic volume provisioning. The operator requires on-demand volume provisioning to create PVs as needed.
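These considerations can be turned into a quick pre-flight check on a parsed StorageClass manifest. This sketch assumes that the fsType appears under either the `fsType` parameter or the CSI `csi.storage.k8s.io/fstype` parameter, and it uses a placeholder set of recommended file systems that you should replace with the list in the Vertica storage format documentation. A provisioner of `kubernetes.io/no-provisioner` indicates a class that cannot provision volumes dynamically.

```python
"""Sketch: check a parsed StorageClass manifest against the considerations above.

Assumptions: the fsType may appear under "fsType" or the CSI key
"csi.storage.k8s.io/fstype"; the recommended file system set is a
placeholder to replace with Vertica's documented list.
"""
from typing import Any, Dict, Iterable, List

NO_DYNAMIC_PROVISIONER = "kubernetes.io/no-provisioner"


def check_storage_class(sc: Dict[str, Any],
                        recommended_fs: Iterable[str] = ("ext4", "xfs")) -> List[str]:
    """Return a list of warnings; an empty list means no issues were found."""
    warnings: List[str] = []
    params = sc.get("parameters") or {}
    fs_type = params.get("fsType") or params.get("csi.storage.k8s.io/fstype")
    if fs_type is None:
        warnings.append("fsType is not set; the provisioner default applies")
    elif fs_type.lower() not in {fs.lower() for fs in recommended_fs}:
        warnings.append(f"fsType {fs_type!r} is not in the recommended list")
    if sc.get("provisioner") == NO_DYNAMIC_PROVISIONER:
        warnings.append("provisioner does not support dynamic provisioning")
    return warnings
```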

Local Volume Mounts

The operator mounts a single PVC in the /home/dbadmin/local-data/ directory of each pod to persist data for the following subdirectories:

- /data: Stores the catalog and any temporary files. There is a symbolic link to this path from the local.dataPath parameter value.

- /depot: Improves depot warming in a rescheduled pod. There is a symbolic link to this path from the local.depotPath parameter value.

- /config: A symbolic link to the /opt/vertica/config directory. This directory persists the contents of the configuration directory between restarts.

- /log: A symbolic link to the /opt/vertica/log directory. This directory persists log files between pod restarts.

Kubernetes assigns each custom resource a unique identifier. The volume mount paths include the unique identifier between the mount point and the subdirectory. For example, the full path to the /data directory is /home/dbadmin/local-data/uid/data.
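The path layout amounts to joining the mount point, the custom resource UID, and the subdirectory name. In this sketch, the UID value is hypothetical.

```python
"""Build the per-pod local volume paths: <mount point>/<uid>/<subdirectory>.

The mount point and subdirectory names come from this section; the UID
value in the example is hypothetical.
"""
import posixpath
from typing import Dict

LOCAL_DATA_MOUNT = "/home/dbadmin/local-data"
SUBDIRECTORIES = ("data", "depot", "config")


def local_paths(uid: str) -> Dict[str, str]:
    """Map each subdirectory name to its full path for the given CR UID."""
    return {sub: posixpath.join(LOCAL_DATA_MOUNT, uid, sub) for sub in SUBDIRECTORIES}


print(local_paths("1b2c3d4e")["data"])  # /home/dbadmin/local-data/1b2c3d4e/data
```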

By default, each path mounted in the /local-data directory is owned by the dbadmin user and the verticadb group. For details, see About Linux Users Created by Vertica and Their Privileges.

Custom Volume Mounts

You might need to persist data between pod life cycles in one of the following scenarios:

- An external process performs a task that requires long-term access to the Vertica server data.

- Your custom resource includes a sidecar container in the Vertica pod.

You can mount a custom volume in the Vertica pod or sidecar filesystem. To mount a custom volume in the Vertica pod, add the definition in the spec section of the CR. To mount the custom volume in the sidecar, add it in an element of the sidecars array.

The CR requires that you provide the volume type and a name for each custom volume. The CR accepts any Kubernetes volume type. The volumeMounts.name value identifies the volume within the CR, and has the following requirements and restrictions:

- It must match the volumes.name parameter setting.

- It must be unique among all volumes in the /local-data, /podinfo, or /licensing mounted directories.
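You can check both naming rules before you apply the CR. In this sketch, `reserved_names` stands in for the names of the volumes that the operator mounts in /local-data, /podinfo, and /licensing. Those names are not listed in this section, so the caller must supply them.

```python
"""Sketch of the volumeMounts.name rules for custom volumes.

`reserved_names` stands in for the names of operator-managed volumes
mounted in /local-data, /podinfo, and /licensing; callers supply them.
"""
from typing import Any, Dict, Iterable, List


def validate_volume_mounts(volumes: List[Dict[str, Any]],
                           volume_mounts: List[Dict[str, Any]],
                           reserved_names: Iterable[str]) -> List[str]:
    """Return a list of errors; an empty list means the mounts are valid."""
    declared = {v["name"] for v in volumes}
    reserved = set(reserved_names)
    errors: List[str] = []
    for mount in volume_mounts:
        name = mount["name"]
        # Rule 1: the mount must reference a volume declared in `volumes`.
        if name not in declared:
            errors.append(f"volumeMount {name!r} has no matching volumes.name")
        # Rule 2: the name must not collide with an operator-managed volume.
        if name in reserved:
            errors.append(f"volumeMount {name!r} conflicts with an operator-managed volume")
    return errors
```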

For instructions on how to mount a custom volume in either the Vertica server container or in a sidecar, see Creating a Custom Resource.

Service Objects

The Vertica Helm chart provides two service objects: a headless service that requires no configuration to maintain DNS records and ordered names for each pod, and a load balancing service that manages internal traffic and external client requests for the pods in your cluster.

Load Balancing Services

Each subcluster has its own load balancing service object. To configure the service object, use the subclusters[i].serviceType parameter in the custom resource to define a Kubernetes service type. Vertica supports the following service types:

- ClusterIP: The default service type. This service provides internal load balancing, and sets a stable IP and port that is accessible from within the subcluster only.

- NodePort: Provides external client access. You can specify a port number for each host node in the subcluster to open for client connections.

- LoadBalancer: Uses a cloud provider load balancer to create NodePort and ClusterIP services as needed. For details about implementation, see the Kubernetes documentation and your cloud provider documentation.
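The following sketch resolves and validates the service type for one subcluster entry of a parsed CR, defaulting to ClusterIP. The `nodePort` key is an assumption for illustration, and the 30000-32767 bound is the default Kubernetes NodePort range, which a cluster administrator can change.

```python
"""Sketch: resolve the service type for one subcluster entry of a parsed CR.

Assumptions: subclusters are dicts with an optional "serviceType" key and a
hypothetical "nodePort" key; 30000-32767 is the default Kubernetes
NodePort range.
"""
from typing import Any, Dict

SUPPORTED_SERVICE_TYPES = {"ClusterIP", "NodePort", "LoadBalancer"}
DEFAULT_SERVICE_TYPE = "ClusterIP"
NODE_PORT_RANGE = range(30000, 32768)  # default --service-node-port-range


def resolve_service_type(subcluster: Dict[str, Any]) -> str:
    """Return the subcluster's service type, defaulting to ClusterIP."""
    service_type = subcluster.get("serviceType", DEFAULT_SERVICE_TYPE)
    if service_type not in SUPPORTED_SERVICE_TYPES:
        raise ValueError(f"unsupported serviceType: {service_type!r}")
    if service_type == "NodePort":
        port = subcluster.get("nodePort")
        if port is not None and port not in NODE_PORT_RANGE:
            raise ValueError(f"nodePort {port} is outside 30000-32767")
    return service_type
```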

To prevent performance issues during heavy network traffic, Vertica recommends that you set the kube-proxy --proxy-mode flag to iptables for your Kubernetes cluster.

Because native Vertica load balancing interferes with the Kubernetes service object, Vertica recommends that you allow the Kubernetes services to manage load balancing for the subcluster. You can configure the native Vertica load balancer within the Kubernetes cluster, but the results are unpredictable. For example, if you set the Vertica load balancing policy to ROUNDROBIN, the load balancing appears random.

For additional details about Kubernetes services, see the official Kubernetes documentation.

Security Considerations

Vertica on Kubernetes supports both TLS and mTLS for communications between resource objects. You must manually configure TLS in your environment. For details, see TLS Protocol.

Admission Controller Webhook TLS Certificates

The VerticaDB operator Helm chart includes the admission controller webhook to validate changes to a custom resource. The webhook requires TLS certificates for data encryption.

The method that you use to install the VerticaDB operator determines how you manage the TLS certificates for the admission controller. If you use the Operator Lifecycle Manager (OLM) and install the VerticaDB operator through OperatorHub.io, the OLM creates and mounts a self-signed certificate for the webhook, which requires no additional action. If you install the VerticaDB operator with the Helm charts, you must manually manage admission controller TLS certificates with one of the following options:

- cert-manager. This Kubernetes add-on generates and manages certificates for your resource objects. It has built-in support in Kubernetes. For installation details, see Installing the VerticaDB Operator.

- Custom certificates. To use custom certificates, you must pass your certificate credentials and a PEM-encoded CA bundle as parameters to the Helm chart. For details, see Installing the VerticaDB Operator and Helm Chart Parameters.

By default, a Helm chart install uses cert-manager unless you provide custom certificates. If you do not install cert-manager or provide custom certificates to the Helm chart, you receive an error when you install the Helm chart.
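In Kubernetes webhook configurations, the caBundle field holds base64-encoded PEM data. This sketch performs a basic structural check on a PEM bundle and encodes it. Whether the Helm chart parameter expects the raw or the encoded form is an assumption to confirm in Helm Chart Parameters, and the check does not validate the certificates cryptographically.

```python
"""Sketch: structurally check a PEM CA bundle and base64-encode it.

Kubernetes webhook configurations carry caBundle as base64-encoded PEM.
Whether the Helm chart parameter expects raw or encoded PEM is an
assumption to confirm; no cryptographic validation is performed.
"""
import base64

PEM_BEGIN = "-----BEGIN CERTIFICATE-----"
PEM_END = "-----END CERTIFICATE-----"


def encode_ca_bundle(pem_text: str) -> str:
    """Return the bundle base64-encoded, after a basic PEM structure check."""
    begins = pem_text.count(PEM_BEGIN)
    # Require at least one certificate and balanced BEGIN/END markers.
    if begins == 0 or begins != pem_text.count(PEM_END):
        raise ValueError("input does not look like a PEM certificate bundle")
    return base64.b64encode(pem_text.encode("ascii")).decode("ascii")
```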

Custom TLS Certificates

Your custom resource might require several custom TLS certificates to secure internal and external communications. For example, you might require a self-signed certificate authority (CA) bundle to authenticate to a supported storage location, and multiple certificates for different client connections. You can mount one or more custom certificates in the Vertica server container with the certSecrets custom resource parameter. Each certificate is mounted in the container at /certs/cert-name/key.
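You can list the resulting mount paths ahead of time from the Secrets that you reference in certSecrets. This sketch maps each Secret and its keys to `/certs/<secret-name>/<key>`. The Secret names and keys are hypothetical.

```python
"""Sketch: list the files mounted for each certSecrets entry.

Follows the /certs/<secret-name>/<key> layout; the Secret names and keys
are hypothetical.
"""
from typing import Dict, List


def cert_mount_paths(secrets: Dict[str, List[str]]) -> List[str]:
    """Map {secret name: [keys]} to sorted /certs/<name>/<key> paths."""
    return sorted(
        f"/certs/{name}/{key}" for name, keys in secrets.items() for key in keys
    )
```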

The operator manages changes to the certificates. If you update an existing certificate, the operator replaces the certificate in the Vertica server container. If you add or delete a certificate, the operator reschedules the pod with the new configuration.
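This rule distinguishes content changes from changes to the set of certificates. The sketch models certificates as a hypothetical mapping of Secret name to content digest and returns the action that applies. The action labels are illustrative, not operator output.

```python
"""Sketch: decide between an in-place certificate replace and a reschedule.

Certificates are modeled as {secret name: content digest}; the action
labels are hypothetical, not operator output.
"""
from typing import Dict, List, Tuple


def plan_cert_changes(old: Dict[str, str], new: Dict[str, str]) -> Tuple[str, List[str]]:
    """Return ("reschedule" | "replace" | "none", affected secret names)."""
    # Adding or deleting a certificate changes the pod spec -> reschedule.
    added_or_removed = sorted(old.keys() ^ new.keys())
    if added_or_removed:
        return "reschedule", added_or_removed
    # Same set of certificates, different contents -> replace in place.
    updated = sorted(name for name in old if old[name] != new[name])
    if updated:
        return "replace", updated
    return "none", []
```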

For details about adding custom certificates to your custom resource, see Creating a Custom Resource.

System Configuration

As a best practice, make system configurations on the host node so that pods inherit those settings from the host node. This strategy eliminates the need to provide each pod a privileged security context to make system configurations on the host.

To manually configure host nodes, refer to the following sections:

- General Operating System Configuration - Automatically Configured by the Installer

- General Operating System Configuration - Manual Configuration

The dbadmin account must use one of the authentication techniques described in dbadmin Authentication Access.