Low-Latency Optimizations

Vertica and Low-Latency Applications

Vertica is typically used for analytic queries that have a long run time. However, you can also use Vertica to handle low-latency applications with queries that typically run in under ten seconds. Low-latency queries are usually used in cases where users need a real-time or near real-time response, such as users with dashboard applications.

Typically, low latency applications also have high concurrency requirements. In such cases, throughput of a Vertica cluster (measured as queries per second) is the metric you should maximize.

Follow the recommendations in this document to optimize your particular use case.

Platform and Hardware Recommendations

For low-latency applications, use bare-metal or physical hardware rather than a virtual machine. Virtual machines and cloud platforms are not ideal platforms for low-latency applications. The virtualization software adds another layer in the software stack required to run Vertica. Each layer in that stack adds latency. Therefore, the fewer layers in your stack, the better.

To achieve low latency, use the fewest number of nodes possible, with a minimum of three nodes to maintain fault tolerance.

For more information about VMWare and tuning latency-sensitive workloads, refer to Best Practices for Performance Tuning of Latency-Sensitive Workloads in vSphere VMs.

Storage Recommendations

Use enterprise grade direct attached storage instead of storage area network (SAN). Doing so usually results in faster data retrieval. Using a SAN may add latency to a query.

Set Up Hardware for Low-Latency Applications

For bare-metal implementations, configure the BIOS on a Windows operating system for maximum performance, as described in the following steps:

- Navigate to System Configuration > BIOS/Platform Configuration (RBSU) > Power Management > HP Power Profile.

- Select Maximum Performance.

- In the

/etc/grub.conffile, modify the kernel entry by appending the following in the command line:

Intel_idle.max_cstate=0 processor.max_cstate=0.

For more information about hardware configuration, see the Vertica Hardware Guide.

Recommendations for Using Vertica on Amazon Web Services (AWS)

Do not run Vertica on cloud platforms for low- latency applications. The following are some best practices you should follow if you choose a cloud platform such as AWS.

AWS provides two storage options:

- Elastic Block Store (EBS)

- Ephemeral storage

EBS storage, like SAN storage, is not local, while ephemeral storage is local to an instance. You should use ephemeral storage for low-latency applications. Writing or reading data from ephemeral storage is faster than EBS storage because the storage is closer to the instance. For more information about AWS best practices, see the Vertica on Amazon Web Services Guide.

While the AWS best practices suggest that users use an 8-disk, EBS software Raid-0 array, this is not ideal for low-latency queries. Users achieve lower latency with a single EBS volume.

Use the following storage types, in priority order:

- Ephemeral storage

- Single EBS volume

- 8-disk, EBS software Raid-0 array

vnetperf Utility

Run the vnetperf utility to measure the network performance of your hosts. This utility lets you measure network latency. For low-latency queries, the maximum RTT (round-trip time) latency is 200 microseconds. You can minimize the RTT latency by minimizing the number of network hops.

An example of the vnetperf utility is as follows:

test | date | node | index | rtt latency (us) | clock skew (us) latency| 2015-06-18_11:33:28,873| 10.54.10.227| 1 | 182 | 55 latency| 2015-06-18_11:33:28,873| 10.54.10.228| 2 | 183 | -98

For more information see vnetperf utility, in the Vertica documentation.

Single Node Queries

You can design your workload to use single-node queries that help achieve low-latency. To use single-node queries, you must design projections and query predicates in a distinct way. For more information, see Routing JDBC Queries Directly to a Single Node in the Vertica documentation.

If you are using single-node queries, the recommendation for using the fewest number of nodes possible does not apply.

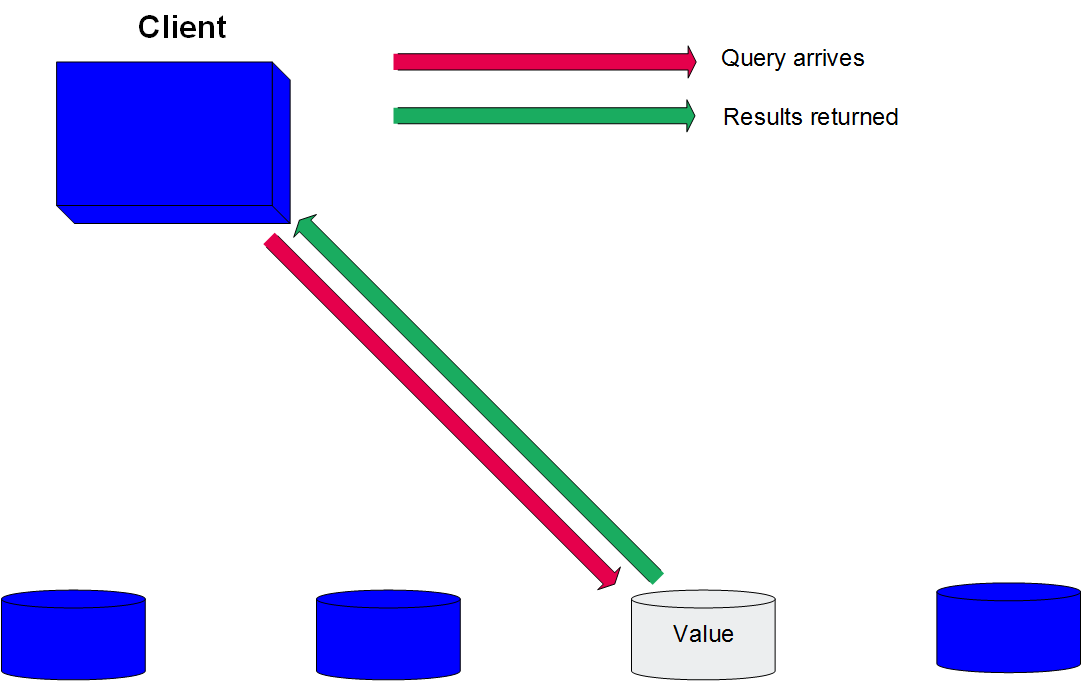

The following graphic shows how you can efficiently execute a query on a single-node that contains the data you need.

Certain query types have different specifications than those recommended for single-node queries. For more information about these query types, see The Benefits of Single Node Queries in the Vertica Community.

Optimize Queries

You can optimize queries to achieve lower latency using any of these approaches:

- Use the WHERE clause. The sort order of the projection must match the WHERE clause. For more information see Choosing Sort Order: Best Practices, in the Vertica documentation.

- Use the appropriate segmentation in the projection definition clause to minimize network traffic between nodes and so that all nodes are equally loaded.

- Use live aggregate projections wherever you need large aggregations (GROUP BY) in the query. For more information, see Creating Live Aggregate Projections in the Vertica documentation.

- Denormalize your schema to optimize the join performance of the database. Denormalization is not ideal in all cases because it increases the storage footprint. However, it does eliminates the need for a join, which lowers latency.

For more information, see Optimizing Query Performance in the Vertica documentation.

Application Configuration

You can configure your Vertica database to achieve lower latency using the following approaches:

- Use JDBC connection pools to reduce the overhead of repeatedly opening network connections between the client and the server. Opening a new connection for each request takes time and is costly. When a request comes in, Vertica assigns the request to a pre-existing connection in the pool. If there are no free connections, Vertica creates a new connection. When the request completes, the connection returns to the pool, awaiting another request. Using JDBC connection pools eliminates the time it takes to authenticate a connection every time a new request is made. This lowers the latency of the query because it reduces the amount of time it takes to connect.

- If you have an intermediate firewall or load-balance device that terminates the idle TCP connection, disable the idle connection termination. Firewalls or other network hardware sit in between the client and the database add latency.

- Load data during off hours or during non-peak load times. After a data load, run the ANALYZE_STATISTICS function to keep table statistics up-to-date. Running data loads during off hours prevents interference with low-latency queries.

- Run updates, deletes, and ETL jobs when low-latency queries are not running.

- Use native connection load balancing to evenly distribute data across all the nodes in your cluster. Doing so helps prevent hosts from being burdened by a disproportionate number of client connections. For more information, see About Native Connection Load Balancing in the Vertica documentation.

Resource Manager

The Resource Manager controls system resources to deliver stable and predictable results. The Resource Manager uses resource pools to flexibly manage the resources available to a query.

Resource Manager Parameters

Using many threads for queries is not optimal for achieving low-latency. Some low-latency queries that return few rows can perform better with less parallelism (fewer threads). The EXECUTIONPARALLELISM configuration parameter controls the number of threads used to process any single query.

You can also lower latency by limiting the amount of memory each query is assigned. You can use this approach generally for queries with hash operators, such as JOIN and GROUPBY. The PLANNEDONCURRENCY configuration parameter controls the query memory budget. You should give a query the exact amount of memory it needs for ideal performance. You can calculate how much memory is needed for low-latency queries. Then, set the resource pool memory as accurately as possible within a small band. For an example of setting these values, see Scenario: The CEO Query in the Vertica documentation.

If you are working with CPU-bound workloads, you should set a hard upper limit on concurrency. This limit should be equal to or less than the number of processors on your Vertica node. Adjust the values of EXECUTIONPARALLELISM and PLANNEDCONCURRENCY so that the product of the two values is equal to or less than the number of physical CPUs available. For an example of setting a hard limit on concurrency, see Scenario: Setting a Hard Limit on Concurrency For An Application.

Manage Memory

The MEMORYSIZE value indicates the minimum amount of memory available to that pool. You should set the value as close to the actual amount of memory needed. You can also set the MAXMEMORYSIZE value to indicate the maximum size a resource pool could grow by borrowing memory from the GENERAL pool. You should set both the MEMORYSIZE and MAXMEMORYSIZE values in as close a range as possible to the actual memory needed by the low-latency queries.

In some situations, two queries both need memory or other resources. For best results, you should assign the low-latency query to the resource pool with higher priority. For an example of how to do so, see Scenario: Setting Priorities on Queries Issued By Different Users in the Vertica documentation.

In Vertica, queries receive the highest nice priority during the first two seconds of execution. You can change the two-second priority time by changing the RUNTIMEPRIORITYTHRESHOLD of the resource pool in which the low-latency queries run. However, do so only for low-latency queries; Do not change the RUNTIMEPRIORITYTHRESHOLD in other scenarios.

Example: Resource Tuning

The following is an example of the typical process used to tune low latency, using the concepts discussed in this document. To tune correctly, you should create a synthetic benchmark, so that at each step in the process, you can validate the effect of the tuning parameter on the query benchmark.

Consider the following schema. The meter_data table contains a point in time for a residential customer’s electricity usage.

DROP TABLE meter_data cascade; CREATE TABLE meter_data ( data_id int NOT NULL, meter_id char(32) NOT NULL, reading_ts timestamp NOT NULL, reading_value float NOT NULL ) ; CREATE PROJECTION meter_data_p1 ( data_id, meter_id, reading_ts, reading_value ) AS SELECT data_id, meter_id, reading_ts, reading_value FROM meter_data ORDER BY reading_ts SEGMENTED BY HASH(meter_id) ALL NODES KSAFE 1; /* COPY meter_data FROM '/home/skeswani/mem_mgmt/data1.txt.bz2' BZIP; COPY meter_data FROM '/home/skeswani/mem_mgmt/data2.txt.bz2' BZIP; COPY meter_data FROM '/home/skeswani/mem_mgmt/data3.txt.bz2' BZIP; */

The following is the initial setup needed for the resource tuning example:

DROP RESOURCE POOL dashboard_pool; CREATE RESOURCE POOL dashboard_pool; CREATE USER dashboard_user; GRANT USAGE ON RESOURCE POOL dashboard_pool TO dashboard_user; ALTER USER dashboard_user RESOURCE POOL dashboard_pool; GRANT ALL on meter_data TO dashboard_user;

The following query is run from a web dashboard. This query displays the highest meter reading for a given day, and renders a graph showing peak use for that day and time.

SELECT reading_ts, reading value FROM meter_data WHERE reading_ts between '2005-05-01' and '2005-05-02' ORDER BY reading_value desc limit 1;

The following is a synthetic concurrent query benchmark used to validate the resource pool tuning in this example. The benchmark runs 15 queries simultaneously, as a web dashboard would. As the resource pool is tuned, you should rerun the benchmark following each change, to verify that the total duration to run the workload is better with each step. Duration is measured as the time between the start of the first query and the end of the last query.

[username@ip-address mem_mgmt]$ cat benchmark.sh

#!/bin/bash

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-01' and '2005-05-02' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-02' and '2005-05-03' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-03' and '2005-05-04' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-04' and '2005-05-05' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-05' and '2005-05-06' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-06' and '2005-05-07' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-07' and '2005-05-08' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-08' and '2005-05-09' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-09' and '2005-05-10' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-10' and '2005-05-11' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-11' and '2005-05-12' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-12' and '2005-05-13' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-13' and '2005-05-14' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-14' and '2005-05-15' order by reading_value desc limit 1;" &

vsql -U dashboard_user -c "select /* +label(dashboard) */ reading_ts, reading_value from meter_data where reading_ts between '2005-05-15' and '2005-05-16' order by reading_value desc limit 1;" &

wait

SELECT count(*),

min(start_timestamp),

max(end_timestamp),

(max(end_timestamp)-min(start_timestamp))SECOND(3) duration

FROM query_requests WHERE request_label='dashboard';

count | min | max | duration

-------+-------------------------------+-------------------------------+----------

15 | 2015-11-12 11:36:45.062353-05 | 2015-11-12 11:36:46.425296-05 | 1.363The following tuning adjustments were made in the example:

- The projection was optimized to run faster with EXECUTIONPARELLELISM=1:

ALTER RESOURCE POOL dashboard_pool EXECUTIONPARALLELISM 1;

- Since the dashboard displayed peak usage for 15 days at a time, it was expected that we could run 15 queries at a time and expect a fast response:

ALTER RESOURCE POOL dashboard_pool PLANNEDCONCURRENCY 15;

- The query was profiled to see how much memory it needed:

Profile select reading_ts, reading value from meter data where reading_ts between '2005-05-13' order by reading_value desc limit 1; NOTICE 4788: Statement is being profiled HINT: Select * from v_monitor.execution_engine_profiles where transaction_id=45035996273715510 and statement_id=1; NOTICE 3557: Initiator memory for query: [on pool general: 127093 KB, minimum: 127093 KB] NOTICE 5077: Total memory required by query: [127093 KB]

The query used 128 MB of memory, so the memory range minimum was allocated at 128MB, with an upper limit of 256MB. However, the query could borrow more from the general pool, if needed. The MEMORYSIZE and MAXMEMORYSIZE values are calculated by multiplying the memory size of the query by the planned concurrency (128x15 and 256x15, respectively).

ALTER RESOURCE POOL dashboard_pool MEMORYSIZE '1920M'; ALTER RESOURCE POOL dashboard_pool MAXMEMORYSIZE '3840M';

- The resource pool was given the highest possible priority.

ALTER RESOURCE POOL dashboard_pool PRIORITY 100;

- For this example, there were 32 CPUs on the node. Limit the MAXCONCURRENCY so that the CPUs are not overloaded.

ALTER RESOURCE POOL dashboard_pool MAXCONCURRENCY 32;

Other Recommended Tasks

Perform the following tasks for best results with low-latency applications:

- For queries that use the same view multiple times, materialize the views using the WITH clause. This approach may help improve low-latency.

- Rewrite queries to flatten them. Low-latency queries perform better if they do not contain subqueries. Vertica attempts to flatten these queries. Look at the query plan to see if the query was flattened. If the query was not flattened, rewriting the query and flattening it might help achieve low-latency.

- Keep the statistics on your table up-to-date. Accurate statistics allow the Optimizer to pick the best plan for the query.