Shards and Subscriptions

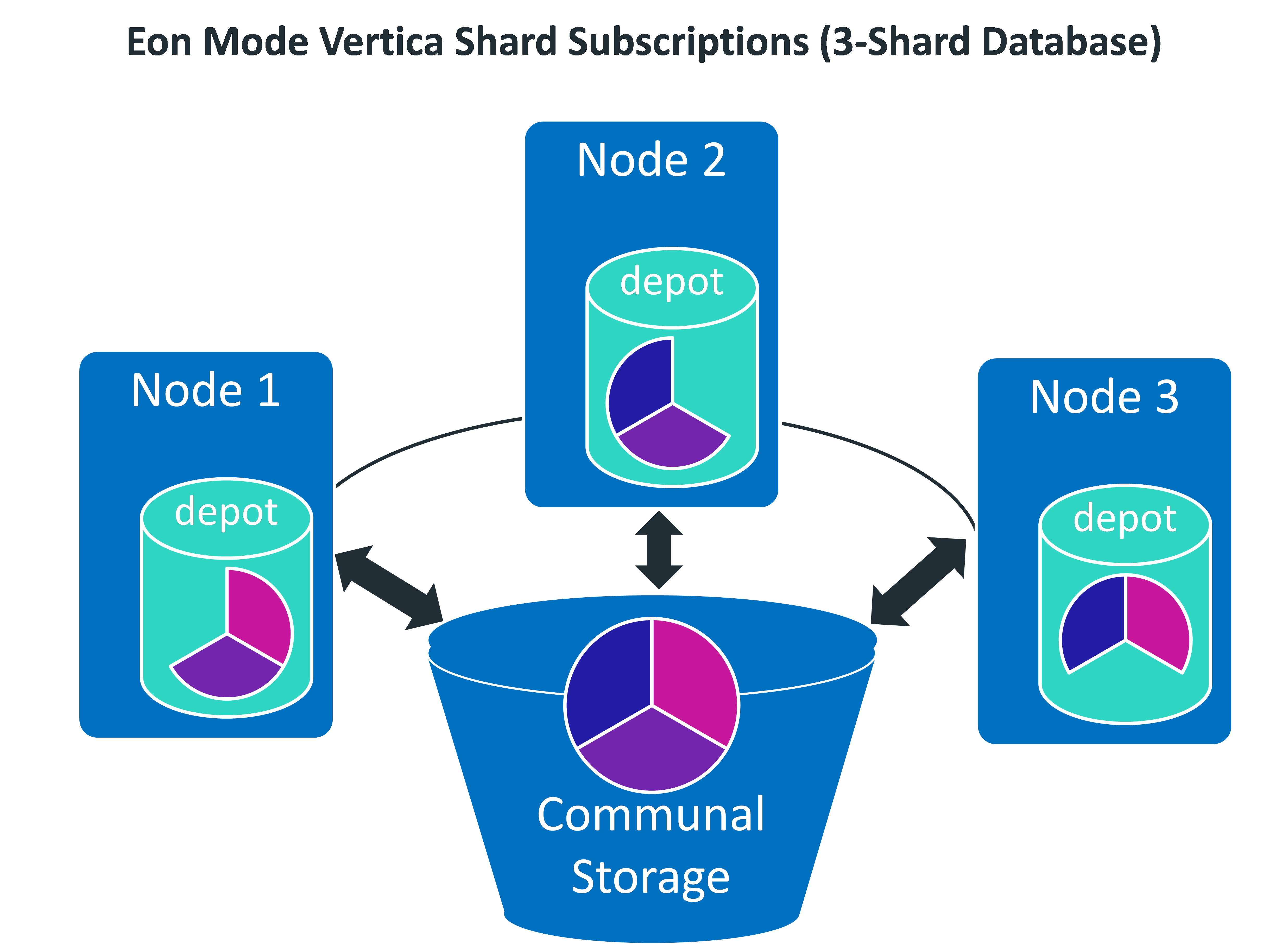

In Eon Mode, Vertica stores data communally in a shared data storage location (for example, in S3 when running on AWS). All nodes can access all of the data in the communal storage location. For nodes to divide the work of processing queries, Vertica must divide the data between them in some way. It breaks the data in communal storage into segments called shards. Each node in your database subscribes to a subset of the shards in the communal storage location. When K-Safety is 1 or higher (high availability), each shard has more than one node subscribing to it. This redundancy ensures that if a node goes down or is busy with another query, the remaining nodes can still access all of the data needed to process queries.

If all of the primary nodes that subscribe to a shard go down, your database shuts down to maintain data integrity. Any node that is the sole subscriber to a shard is a critical node. If that node goes down, then your database shuts down.
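The two rules above can be illustrated with a small model. This is only a sketch: the `subscriptions` map and the node and shard names are hypothetical, and the functions are not part of any Vertica API. It treats a database as a mapping from each shard to the set of primary nodes subscribed to it.

```python
# Hypothetical subscription map: shard name -> set of subscribing primary nodes.
# The names are illustrative only and do not come from a real cluster.
subscriptions = {
    "shard1": {"node1", "node2"},
    "shard2": {"node2", "node3"},
    "shard3": {"node3"},  # only one subscriber, so node3 is critical
}


def critical_nodes(subscriptions):
    """Return the nodes that are the sole subscriber to at least one shard."""
    return {
        next(iter(nodes))
        for nodes in subscriptions.values()
        if len(nodes) == 1
    }


def database_stays_up(subscriptions, down_nodes):
    """Return True if every shard still has at least one subscriber that is up."""
    down = set(down_nodes)
    return all(len(nodes - down) > 0 for nodes in subscriptions.values())


print(critical_nodes(subscriptions))
print(database_stays_up(subscriptions, {"node1"}))
print(database_stays_up(subscriptions, {"node3"}))
```

In this model, losing `node1` leaves every shard with a subscriber, while losing `node3` leaves `shard3` with none, which corresponds to the shutdown case described above.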

The shards in your communal storage location are similar to a collection of segmented projections in an Enterprise Mode database.

A special type of shard called a replica shard stores metadata for unsegmented projections. Replica shards exist on all nodes.

Each shard, including a replica shard, has exactly one subscribed node marked as the primary subscriber. The primary subscriber node runs tuple mover jobs on the shard. These jobs manage the storage containers holding the data in the shard. See Tuple Mover Operations for more information about these operations.

You define the number of shards when you create your database. For the best performance, choose a shard count that is no greater than 2× the number of nodes. In any case, keep the shard-to-node ratio at or below 3:1. MC warns you to take all aspects of shard count into consideration. Once set, the shard count cannot be changed.
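As a quick check of the sizing guidance above, the following sketch classifies a planned shard count against a node count. The function name and the returned labels are hypothetical and chosen for illustration; they are not Vertica or MC output.

```python
def classify_shard_count(shard_count, node_count):
    """Classify a shard count using the 2x and 3:1 guidance.

    Returns one of three illustrative labels (not Vertica terminology):
    "recommended", "within limit", or "exceeds limit".
    """
    if shard_count <= 0 or node_count <= 0:
        raise ValueError("shard_count and node_count must be positive")
    if shard_count <= 2 * node_count:
        return "recommended"
    if shard_count <= 3 * node_count:
        return "within limit"
    return "exceeds limit"


print(classify_shard_count(6, 3))
print(classify_shard_count(9, 3))
print(classify_shard_count(12, 3))
```

Because the shard count is fixed at creation time, it can be worth running this kind of check against the node count you expect to reach later, not only the count you start with.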

When the number of nodes is greater than the number of shards (with ETS), the throughput of dashboard queries improves. See Expanding Your Cluster below for important information on organizing your nodes when you have more nodes than shards. When the number of shards exceeds the number of nodes, you can expand your cluster in the future to improve the performance of long analytic queries.

For efficiency, Vertica transfers metadata about shards directly between database nodes. This peer-to-peer transfer applies only to metadata; the actual data that is stored on each node gets copied from communal storage to the node's depot as needed.

Expanding Your Cluster

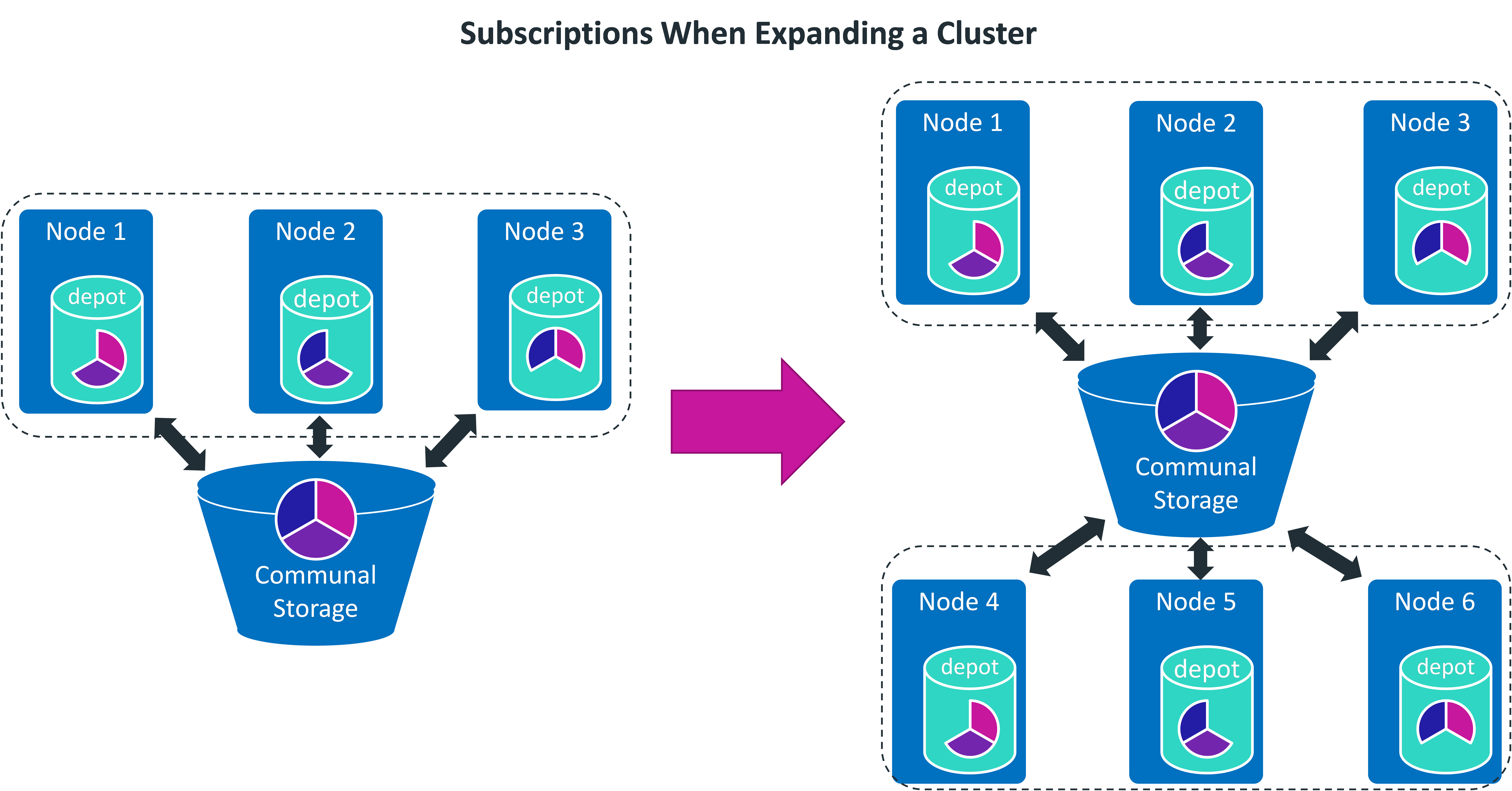

This diagram shows the process of expanding a 3-node 3-shard database to 6 nodes. To ensure maximum performance, the three new nodes are added to a new subcluster (see Subclusters for details). The number of shards stays the same, and the additional nodes gain subscriptions to the shards. After adding nodes to your cluster with admintools, you must rebalance the shards.
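The expansion described above can be pictured with a toy model. This sketch assumes a simple round-robin placement in which each node subscribes to two shards; that placement rule, the `copies` parameter, and all node and shard names are assumptions made for illustration and do not describe how Vertica actually assigns subscriptions.

```python
def subscribe_round_robin(nodes, shards, copies=2):
    """Hypothetical placement: node i subscribes to shard i and the next
    copies - 1 shards, wrapping around the shard list."""
    subs = {shard: [] for shard in shards}
    for i, node in enumerate(nodes):
        for k in range(copies):
            subs[shards[(i + k) % len(shards)]].append(node)
    return subs


shards = ["shard1", "shard2", "shard3"]
original_nodes = ["node1", "node2", "node3"]
new_subcluster = ["node4", "node5", "node6"]

# Subscriptions before expansion: 3 nodes covering 3 shards.
before = subscribe_round_robin(original_nodes, shards)

# After expansion, the new subcluster gains its own subscriptions
# to the same three shards. The shard count does not change.
added = subscribe_round_robin(new_subcluster, shards)
after = {shard: before[shard] + added[shard] for shard in shards}

for shard in shards:
    print(shard, len(before[shard]), "->", len(after[shard]), after[shard])
```

In this model the number of shards stays at three while the number of subscribers per shard doubles, which matches the description that the additional nodes gain subscriptions to the existing shards.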

If you scale down your cluster, Vertica rebalances the data automatically. However, when you scale up your cluster, you must start the rebalance operation manually.