Containerized Vertica on Kubernetes

Beta Feature — For Test Environments Only

Kubernetes is an open-source container orchestration platform that enables you to automate deployment, service management, and task monitoring for containerized applications at scale. You provide a description of your desired environment, and Kubernetes allocates predefined resources and handles their utilization. This decouples your applications from the underlying infrastructure, allowing you to develop and deploy applications by describing the resources that your applications require, rather than configuring access to specific resources with each change in your environment.

Vertica supports running an Eon Mode database in a Kubernetes StatefulSet.

Vertica Eon Mode on Kubernetes Architecture

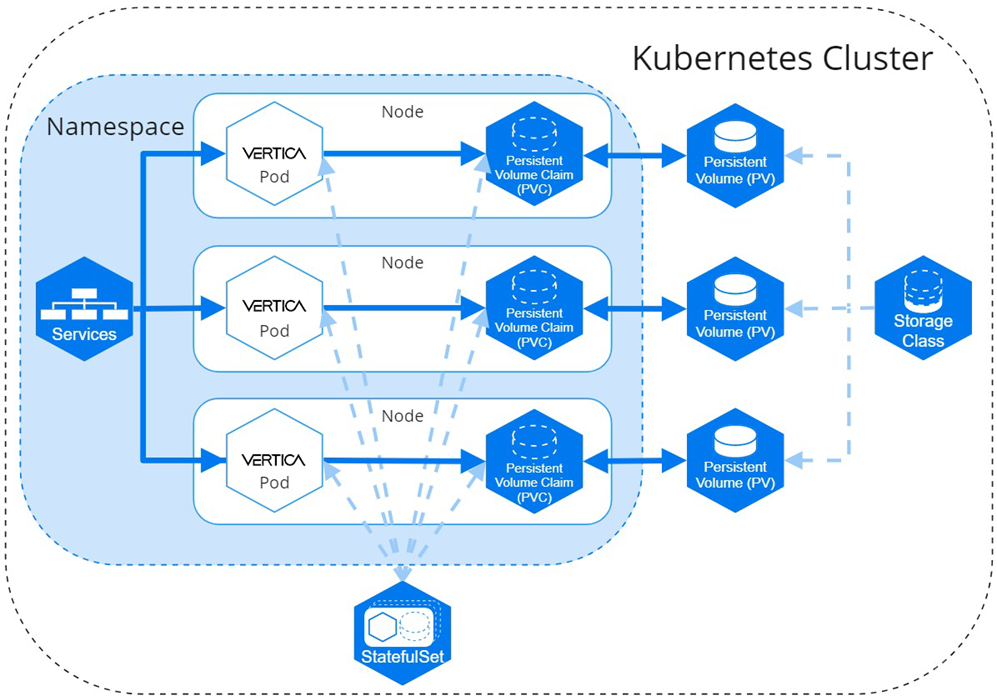

Vertica provides official Helm charts to deploy an Eon Mode database as a StatefulSet on a Kubernetes cluster. Helm is a package manager that helps you define and maintain your Kubernetes workload resources. StatefulSets are a type of Kubernetes workload resource where each pod has a unique identity and state. When a pod fails or is rescheduled, the replacement pod is assigned the identity and state of the predecessor pod.

The following diagram describes the Vertica StatefulSet Helm chart architecture:

Vertica Pod

A pod consists of one or more logically grouped containers that consume the resources of their host node. It is easiest to think of a pod as a wrapper around the Vertica process and its environment. Generally, pods have more than one container when the containers contribute to the same environment or process. Each Vertica pod uses a configinit container and a server container:

- configinit: an init container that bootstraps the Vertica environment. This container configures the /opt/vertica/config directory that persists local storage and handles pod restarts. During a pod restart, the host IP addresses in the /opt/vertica/config/admintools.conf file and /opt/vertica/config directory are updated when the database is UP. If the database is DOWN, these values are updated when the database is UP again. For details about pod restarts, see Restarting a Node with New Host IPs.

  The configinit container must successfully run to completion before the server container starts.

- server: the long-lived container that runs the Vertica processes. If this container stops and the cluster is UP, the pod tries to restart the process. If the cluster is DOWN, Kubernetes reschedules the pod and you must manually start the database with admintools and the start_db option.

When a pod fails or is rescheduled, StatefulSets use DNS names to replace the pod. Pods are assigned ordered and stable DNS names that are unique within the StatefulSet. When a pod containing a Vertica environment goes down, the pod is rescheduled using the same DNS name as the previous pod.
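The stable naming can be illustrated with a minimal sketch. It assumes the standard Kubernetes pattern for StatefulSet pods behind a headless service, `<statefulset>-<ordinal>.<service>.<namespace>.svc.<cluster-domain>`, and the common default cluster domain `cluster.local`. The StatefulSet, service, and namespace names used here are hypothetical, not the names that the Vertica Helm chart generates.

```python
def pod_fqdn(statefulset: str, ordinal: int, headless_service: str,
             namespace: str = "default",
             cluster_domain: str = "cluster.local") -> str:
    """Build the stable DNS name of one StatefulSet pod.

    Assumes the standard Kubernetes naming pattern; the cluster domain
    may differ in your environment.
    """
    if ordinal < 0:
        raise ValueError("ordinal must be non-negative")
    pod_name = f"{statefulset}-{ordinal}"  # ordered, stable pod name
    return f"{pod_name}.{headless_service}.{namespace}.svc.{cluster_domain}"


def statefulset_fqdns(statefulset: str, replicas: int, headless_service: str,
                      namespace: str = "default") -> list:
    """List the DNS names for every ordinal from 0 to replicas - 1."""
    return [pod_fqdn(statefulset, i, headless_service, namespace)
            for i in range(replicas)]


# Hypothetical names, for illustration only.
for name in statefulset_fqdns("vertica", 3, "vertica-headless", "db"):
    print(name)
```

Because the name depends only on the StatefulSet name and the ordinal, a rescheduled pod resolves under the same DNS name as the pod it replaces.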

When pods are rescheduled, they require information about the environment to become part of the cluster. This information is provided by a combination of the Downward API, Secrets, Helm chart configuration parameters, and the ConfigMap. Any information that is not provided to each Vertica container with environment variables or the Helm chart is provided as a mounted volume in the /etc/podinfo directory.
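As a rough illustration of how a process inside the container might consume the mounted information, the sketch below reads every regular file in a directory into a dictionary keyed by file name. It assumes the usual Kubernetes behavior of exposing each projected item as one file; the file names that actually appear in /etc/podinfo are not listed in this document, so the sketch does not depend on any particular name.

```python
from pathlib import Path


def read_podinfo(directory: str = "/etc/podinfo") -> dict:
    """Return {file name: file contents} for a mounted pod-info volume.

    Assumption: each piece of information is one regular file (or a
    symlink to one). Hidden entries such as "..data", which Kubernetes
    uses for bookkeeping, are skipped.
    """
    info = {}
    root = Path(directory)
    if not root.is_dir():
        # Not running inside a pod, or the volume is not mounted.
        return info
    for entry in sorted(root.iterdir()):
        if entry.name.startswith(".") or not entry.is_file():
            continue
        info[entry.name] = entry.read_text().strip()
    return info


if __name__ == "__main__":
    for key, value in read_podinfo().items():
        print(f"{key}={value}")
```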

Persistent Storage

The StatefulSet provides each pod with a PersistentVolumeClaim (PVC), which requests storage from the PersistentVolume (PV). As a best practice, the StatefulSet does not create the PV itself, because creating a PV requires additional access privileges.

Set the persistentVolumeClaim.storageClassName configuration parameter to ensure that the PVC is mapped to the correct StorageClass. If you do not set this parameter, the default StorageClass is used. If no default StorageClass is configured in Kubernetes, the pods in the StatefulSet do not advance past the Pending phase.
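One way to pass this parameter is on the Helm command line. The sketch below only assembles the argument list for `helm install` with a `--set` override; it does not run Helm. The release name, chart path, and StorageClass name are hypothetical placeholders, and it assumes that the chart accepts the parameter under the exact key named above.

```python
import shlex


def helm_install_args(release: str, chart: str, storage_class: str,
                      namespace: str = "default") -> list:
    """Assemble a `helm install` command that sets the PVC StorageClass."""
    return [
        "helm", "install", release, chart,
        "--namespace", namespace,
        # Key taken from the parameter name in this document.
        "--set", f"persistentVolumeClaim.storageClassName={storage_class}",
    ]


# Hypothetical release, chart path, and StorageClass.
cmd = helm_install_args("vertica-test", "./vertica-chart", "standard")
print(shlex.join(cmd))
```

Building the command as a list keeps each argument separate, so it can be handed to `subprocess.run` without shell quoting concerns.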

Each pod mounts a single PersistentVolumeClaim (PVC) in the /home/dbadmin/local-data directory to persist data for the following subdirectories:

- /data: Stores the catalog, and is persisted to help depot warming after a pod rescheduling. When creating a database, use this as the data directory.

- /depot: When creating a database, use this as the depot. This directory is persisted to help depot warming after a pod rescheduling.

- /config: A symbolic link to the /opt/vertica/config directory. During initial deployment, the configinit container populates this directory with the necessary files. These files are used during the automated re-ip operation to reschedule pods.

- /opt/vertica/log: A symbolic link to the /opt/vertica/log directory. This directory persists log files between pod restarts.

For additional details about PersistentVolumes, PersistentVolumeClaims, and StorageClasses, see the official Kubernetes documentation.

MinIO provides an operator for testing your on-premises communal storage.

Service Objects

The Vertica Helm chart provides the following two service objects:

- Load balancing service that provides a single static cluster IP address and port for client access. This service distributes client requests among the pods in your Kubernetes cluster.

- Headless service that maintains DNS records and ordered names for each pod.

For additional details about Services, see the official Kubernetes documentation.
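To make the difference between the two service objects concrete, the following sketch builds two illustrative Service manifests as Python dictionaries. It is not the manifest that the Vertica Helm chart renders: the service names, the selector labels, and the use of port 5433 (Vertica's default client port) are assumptions. The one Kubernetes-defined detail is that a headless service is declared by setting `clusterIP` to the string `None`.

```python
import json


def service_manifests(name: str, selector: dict, port: int = 5433):
    """Return (client_service, headless_service) manifests as dicts."""

    def ports():
        # Fresh list per manifest so the two dicts share no mutable state.
        return [{"name": "vertica", "port": port, "targetPort": port}]

    client_service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        # A ClusterIP service gets one stable virtual IP and spreads
        # connections across the pods matched by the selector.
        "spec": {"type": "ClusterIP", "selector": dict(selector),
                 "ports": ports()},
    }
    headless_service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"{name}-headless"},
        # clusterIP "None" makes the service headless: no virtual IP,
        # only per-pod DNS records.
        "spec": {"clusterIP": "None", "selector": dict(selector),
                 "ports": ports()},
    }
    return client_service, headless_service


# Hypothetical name and label.
client, headless = service_manifests("vertica", {"app": "vertica"})
print(json.dumps(headless, indent=2))
```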

Security Considerations

As a best practice, make system configurations on the host node so that pods can inherit those settings from the node. This means that you do not have to provide each pod a privileged security context to make system configurations on the host.

Vertica is installed in each pod with the --no-system-configuration option to prevent pods from making configuration changes. To run Vertica optimally, manually configure host nodes using the settings found in the following sections:

- General Operating System Configuration - Automatically Configured by the Installer

- General Operating System Configuration - Manual Configuration

Vertica on Kubernetes supports both TLS and mTLS for internal communications between pods in your cluster. You must manually configure TLS in your environment, including the following security parameters:

- EnableSSL

- SSLPrivateKey

- SSLCertificate

- SSLCA (optional)

For details, see TLS Protocol.
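Because TLS is configured manually, a small pre-flight check can help catch an omitted parameter. The sketch below assumes you have already collected the parameter values into a dictionary by some means of your own; it only verifies that the required parameters listed above are present and non-empty, and it does not read from or write to Vertica.

```python
REQUIRED_TLS_PARAMETERS = ("EnableSSL", "SSLPrivateKey", "SSLCertificate")
OPTIONAL_TLS_PARAMETERS = ("SSLCA",)


def missing_tls_parameters(configured: dict) -> list:
    """Return the required TLS parameters that are absent or empty."""
    return [name for name in REQUIRED_TLS_PARAMETERS
            if configured.get(name) in (None, "")]


# Hypothetical values, for illustration only.
settings = {"EnableSSL": "1", "SSLCertificate": "<certificate text>"}
print(missing_tls_parameters(settings))
```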

The dbadmin account must use one of the authentication techniques described in dbadmin Authentication Access.

Managing Internal and External Workloads

Currently, the Vertica StatefulSet uses only a single external service object. All external client requests are sent through this service object and load balanced among the pods in the cluster.

Subclusters

Because a single service handles all external requests, you can direct a workload to a specific subcluster only from within the Kubernetes cluster. To direct a workload from within Kubernetes, you must know the fully qualified domain name (FQDN) for the pod.

Import and Export

Importing and exporting data between your Kubernetes deployment and a cluster outside of Kubernetes requires that you expose the service with the NodePort or LoadBalancer service type and properly configure the network.

When configuring the network to import or export data, you must assign each node a static IP export address. When pods are rescheduled to different nodes, you must update the static IP address to reflect the new node.

See Configuring the Network to Import and Export Data for more information.
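The bookkeeping that rescheduling introduces can be sketched as a simple comparison. The example assumes you maintain three mappings yourself: which node each pod currently runs on, the static IP address assigned to each node, and the export address currently recorded for each pod. All pod names, node names, and addresses are hypothetical, and the sketch only reports what needs to change; it does not update any Vertica or Kubernetes setting.

```python
def stale_export_addresses(pod_to_node: dict, node_static_ip: dict,
                           current_export_ip: dict) -> dict:
    """Return {pod: new address} for pods whose recorded export address
    no longer matches the static IP of the node they run on."""
    updates = {}
    for pod, node in pod_to_node.items():
        expected = node_static_ip[node]
        if current_export_ip.get(pod) != expected:
            updates[pod] = expected
    return updates


# Hypothetical state after vertica-1 moved from node-b to node-c.
pods = {"vertica-0": "node-a", "vertica-1": "node-c"}
nodes = {"node-a": "10.0.0.1", "node-b": "10.0.0.2", "node-c": "10.0.0.3"}
recorded = {"vertica-0": "10.0.0.1", "vertica-1": "10.0.0.2"}
print(stale_export_addresses(pods, nodes, recorded))
```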