The recent DBTA Data Summit provided a lot to think about. I did a short talk in the “Analytics in Action” track about how data analysts, architects and engineers can turn the endless waves of disruption we keep getting hit with into opportunities to boost the bottom line. There were some very cool talks by other folks as well. For me, the highlights of the conference were Michael Stonebraker’s keynote and the Data Kitchen folks diving into the principles of DataOps. The main theme of the conference seemed to be finding better ways to get quality data to the people who need it.

Michael Stonebraker’s 800 Pound Gorilla

Michael Stonebraker, one of the founders of Vertica and a Turing Award winner, kicked off the event with an interesting overview of all the different ways we manage data, as well as where data management is headed and why. He had a running theme of there being an 800 pound gorilla in the corner somewhere that we couldn’t properly handle, even with all our evolving data management strategies.

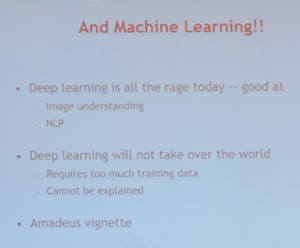

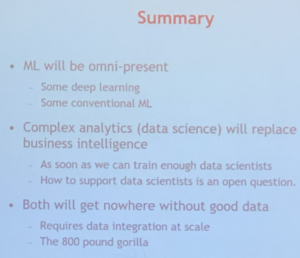

He had us all waiting in suspense as he discussed the history of databases and data management, from data warehouses to machine learning, touching on some of the hottest topics in analytics along the way. For each subject he pointed out a flaw, often a reason why it wasn’t going to become the one and only way to manage or analyze data. Every strategy has its strengths, but each has its weaknesses as well. He told various stories from his decades in the business to illustrate both. In particular, he expressed the opinion that MapReduce was passé, which I can’t argue with, and that Oracle worked best as slideware. Ouch.

In the end, the gorilla Stonebraker felt we hadn’t tamed yet wasn’t deep learning or high-velocity data, but the vast variety of data proliferating in the market faster than we can keep up with. He also put a lot of emphasis on the fact that data scientists spend WAY too much time finding, cleaning, and checking data sets, a point that was made again and again in presentations throughout the conference. Breaking down silos, integrating data, and scrubbing it for quality are still challenges that a lot of businesses have failed to conquer.

If you ever get a chance to hear this man speak, he’s definitely a “wake your brain up” kind of speaker.

I have to say I’m delighted to see that the consensus has shifted to recognize the continued need for quality data. For a while there, I felt like I was shouting into the wilderness when advocating data quality in the modern age. In my opinion, big data analytics doesn’t make data quality less important; it makes it more important.

Paige Roberts’ Waves of Disruption

My session was standing room only, and I’d love to say they were all there to hear me pontificate, but Badal Shah, Director of Development at Fannie Mae, had the slot just before me, talking about the evolution of their enterprise data strategy.

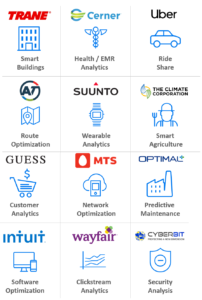

Everyone stayed for my talk, though, as we had a very similar message: how to help your organization become more data driven. I talked about the technological disruptions that keep giving data architects and managers nightmares, and how they can be turned into advantages. I anchored each point with a concrete example of a company that did exactly that.

All those stories were gleaned from Vertica’s impressive list of customer case studies. We have a lot of customers out there doing some remarkable things in a wide variety of industries. When I heard names like Uber and Twitter and Etsy as our customers, not to mention the ones I’m not allowed to say publicly, I knew this tech had something serious going for it. Breaking that down into the key capabilities that help our users get happy and wealthy from what many companies see as a threat and a burden makes the information something anyone can use.

If you’re interested in learning more, they didn’t record the presentations, but my slides, Badal Shah’s, and most of the other presenters’ slides are here: http://www.dbta.com/DataSummit/2019/Presentations.aspx

Christopher Bergh of Data Kitchen’s Data Ops Cookbook

Christopher Bergh, the CEO and Head Chef at Data Kitchen, gave the other presentation that really stuck with me. DataOps is gaining a lot of traction. It combines principles of DevOps, Agile development and Lean Manufacturing to tackle that crazy statistic about data scientists spending the majority of their time trying to get good data sets.

Everyone needs good data sets, and as Michael Stonebraker pointed out, data sets are proliferating, becoming even more diverse and hard to manage over time. The principles and processes in DataOps make an awful lot of sense. I’ve seen several different strategies to get clean, trustworthy datasets into the hands of the people who need them, and this one seems like the most sustainable and scalable.

I snagged my own copy of the DataOps cookbook while I was there. It makes some very valid points. I have to say that sometimes just having a plan, a way forward, can be a huge advantage in itself.

Becoming Data Driven Requires … Data

The disruptive changes I talked about in my presentation are making data management harder and harder. But those are the same disruptions that are allowing us to make major leaps forward in advanced analytics. They’re the same changes in the data landscape that are making it more and more possible for nearly every job in a company to be data-driven. DataOps as a strategy could really help a lot of companies ride those waves of disruption, tame this 800 pound gorilla, and provide data where it’s really needed.

Related Resource:

Check out Michael Stonebraker’s book: “Making Databases Work”